DHTS Azure HPC Home

The DHTS Azure HPC service is a platform for managing and operating high performance computing clusters. The design of this system allows for compute on sensitive and restricted data types, such as Protected Health Information. The DHTS Azure School of Medicine High Performance Computing cluster (DASH) is one cluster in the system, and is a shared resource for Duke faculty, staff, researchers, and students working with research related to the School of Medicine. A research group can also request a custom build-out of an Azure HPC cluster or other virtual machines for computational analysis with specific choices of VM types and scheduler software.

Getting Help

You can reach the DHTS Azure HPC support team in the DASH User Forum and Online Support or by email at groups-systems-hpc-dhts-request@duke.edu.

Quickstart Guide

Follow our Quickstart Guide to learn the basics of connecting to and working in these clusters.

Contents

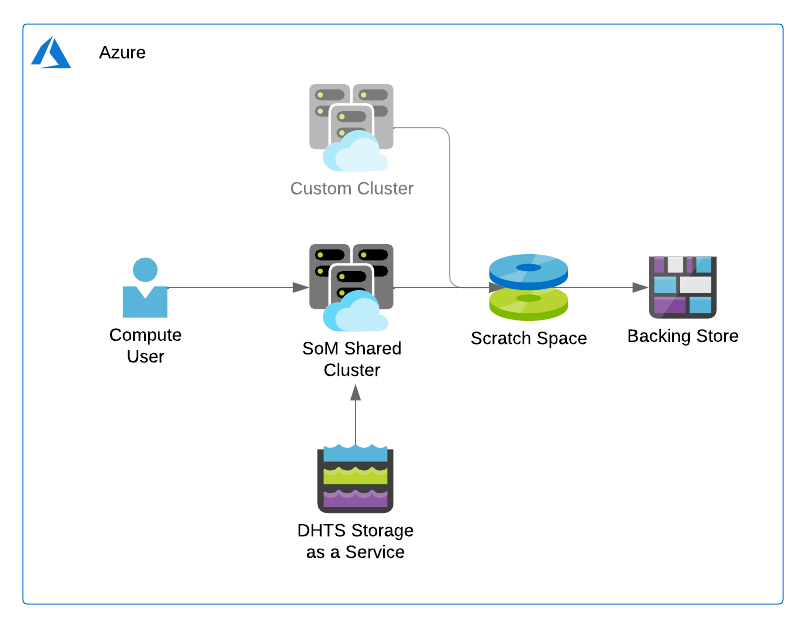

High Level Architecture

Frequently Asked Questions

What are the similarities and differences between the SoM Shared Cluster (DASH) and HARDAC?

The SoM Shared Cluster, DASH, was inspired by HARDAC, which means many things that you are already familiar with on HARDAC will carry over.

Similarities:

- Slurm-based scheduling system

- Large, high-performance (high-IOPS) shared storage for /data (aka "active" data) and /home

- Resides in the DHTS network

- Has access to the internet

- Has Singularity, conda, and Python installed at the system level

- Can run Jupyter Notebooks, RStudio Server, Snakemake, Nextflow and other web-based user software on compute nodes

- SSH access is available from any Duke Health or Duke University network

Differences:

- DASH is based on the Ubuntu Linux distribution instead of the RedHat Linux distribution.

- DASH is composed of two types of nodes:

  - Execute nodes have 32 cores and 256 GB of RAM

  - Highmem nodes have 96 cores and 672 GB of RAM

- HARDAC is composed of many node types, but primarily ones with 32 cores and 256 GB of RAM.

- Nodes in DASH can be always on (24x7) or can autoscale based on the demand of jobs in the scheduler.

  - This matches resource utilization to the current load of the cluster and effectively provides more compute availability during peak utilization times.

  - It can take 6-12 minutes for a node to autoscale into an available state.

  - Currently there are 6 HPC nodes (somhpc-execute-[1-6]) always running, with the rest set to autoscale.

- There is no software module system.

  - Due to the wide range of software dependencies that each lab brings to the cluster, it is not possible to support every user with system-wide dependencies.

  - Each lab or user should create and manage their own software dependency environment, typically through Singularity containers or conda environments.

- /data and /home are backed by a 128 TiB Azure Lustre file share, which is backed up to a Blob container.

  - HARDAC uses an InfiniBand-based GPFS system for /data and /home, which does not back up data.

- /tmp in DASH is backed by the attached SSD storage that comes with each node.

  - Execute nodes have 1200 GB of /tmp storage.

  - Highmem nodes have 3800 GB of /tmp storage.

- Egress from the cluster on ports other than 80 and 443 (i.e. web ports) is blocked.

  - The sequencing core's dnaseq3.gcb.duke.edu SFTP server is an exception to this policy to allow sftp of files via port 22.

  - Other exceptions can be made to this policy by request.

How much does it cost to use the SoM Shared Cluster?

Through the end of Fiscal Year 2023 (June 2023), there is no cost to end users for using DASH.

Can costs be controlled in the SoM Shared Cluster?

Yes, there are upper limits on the number of nodes allowed in the cluster.

What are the differences between scratch space on HARDAC and DASH?

HARDAC nodes are connected with InfiniBand and GPFS with ~7 GB/s of read and write throughput to 1.2 petabytes of storage. For more information, see details here.

DASH has "active" storage in the cluster using an Azure Lustre file share with 128 TiB of concurrent cache space. This is the ideal location for the heaviest I/O jobs. Lustre periodically moves data to the backend Azure Blob Storage, which can hold up to 5 petabytes (5000 TB).

How much space does our lab have on /data (aka “scratch space”)?

The current cap on scratch space is 5 PB, the limit for an Azure Blob Storage Account. In the future, we may impose lab-specific storage quotas as well as term limits for unused data.

Do I have to pay for scratch space in DASH?

In FY23 there are no costs for hosting data in scratch space.

How does /tmp work on Compute nodes? (permissions)

The /tmp directory on each node is a symlink to the SSD storage attached to that node, which is mounted at /mnt. The size of /mnt depends on the node type. You can check the node specs here: DASH - DHTS Azure School of Medicine High Performance Computing. /tmp has a performance advantage over the /data and /home directories because it avoids the latency those shared filesystems incur when writing many small files. Singularity's fakeroot feature cannot be run on a shared filesystem such as /data or /home, but it can run on a local filesystem like /tmp.
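As a minimal sketch of how a job might take advantage of node-local /tmp, the script below creates a private working directory there and removes it when the script exits. The `make_scratch` helper and the `myjob` prefix are hypothetical names chosen for illustration; they are not part of DASH.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: create a private directory on node-local storage.
# mktemp -d creates the directory accessible only by its owner.
make_scratch() {
    mktemp -d "/tmp/myjob.XXXXXX"
}

scratch_dir="$(make_scratch)"

# Remove the directory when the script exits, even after an error.
trap 'rm -rf "$scratch_dir"' EXIT

echo "Working in $scratch_dir"
# Copy inputs from /data here, run the I/O-heavy step, then copy results back.
```

Because /tmp is local to each node, anything left there is not visible from other nodes, so results that need to persist should be copied back to /data before the job ends.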

Will my data from HARDAC scratch space be migrated to Azure for me?

Yes, the migration team will migrate your HARDAC scratch space data into the Lustre file share for you.

What scheduler does DASH use?

DASH uses Slurm v.20.11.7.

What operating system will DASH run on?

DASH is currently running on Ubuntu v20.04.

From what computers can I connect to DASH?

You can connect to the scheduler node of the cluster from any Duke Health or Duke University network location. This includes on-campus locations and the dmvpn.duhs.duke.edu VPN. If you find that you are unable to connect from an expected location, please send the IP address of the computer to the support team.

How do I put data into the DASH?

Please see the documentation in Getting Data onto DASH.

What changes will we need to make to our software pipelines compared to using HARDAC?

DASH does not have a software module system, so if your pipeline on HARDAC relies heavily on system modules, you may need to refactor it to point its dependencies to a user-created environment. We recommend that users run Singularity containers or create their own conda environment to install all the necessary dependencies.
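The sketch below illustrates one way a pipeline step could replace module loads with a user-owned conda environment. It writes a small Slurm batch script to disk so it can be inspected before submission. The environment name `my-pipeline-env`, the script `my_pipeline.py`, the file name `conda_job.sbatch`, and the resource values are all assumptions for illustration, not DASH defaults.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Write an example batch script; the quoted 'EOF' keeps $(...) unexpanded here.
cat > conda_job.sbatch <<'EOF'
#!/bin/bash
#SBATCH --job-name=conda-example
#SBATCH --cpus-per-task=4
#SBATCH --mem=16G
#SBATCH --output=conda-example-%j.out

# Make "conda activate" available in a non-interactive shell.
eval "$(conda shell.bash hook)"

# Hypothetical environment created beforehand by the user.
conda activate my-pipeline-env

# Hypothetical pipeline entry point.
python my_pipeline.py
EOF

echo "Review the script, then submit with: sbatch conda_job.sbatch"
```

A containerized step would follow the same pattern, with the activation lines replaced by a `singularity exec` call against the lab's own image.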

What happens if I accidentally consume all the resources of the cluster?

You will be a poor neighbor to your fellow researchers, but that is all. Please cancel your job or contact our support team to assist.

Can I put sensitive data or restricted data, such as PHI, on DASH?

Yes, the DASH cluster is the primary location for computing on sensitive and restricted data.

Is there a less secure version of the cluster for non-sensitive data?

No, all clusters in the DASH have the same security posture. The Duke Compute Cluster in Duke Research Computing, provided by OIT, is another great alternative for HPC on non-sensitive data.

Can I use scp to copy files to an Azure HPC cluster?

Yes, you can use scp to transfer files from other Duke network locations directly into the cluster. However, the highest-bandwidth option is to move data into the Lustre file share using the azcopy tool, which also transfers data in parallel to speed up large transfers. Please refer to Getting Data into Azure HPC for details.

Why can't I ssh to external computers from the scheduler node?

Outbound port 22 is closed by design with some exceptions. If you have a server on the Duke Network that you would like an outbound connection to, please contact the support team.

Existing Exceptions:

- dnaseq3.gcb.duke.edu
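Before contacting support, it can help to confirm whether a given destination is reachable at all. The following is a minimal sketch, assuming bash with `/dev/tcp` support and the coreutils `timeout` command are available; the `can_connect` helper is a hypothetical name, and the 5-second timeout is an arbitrary choice.

```shell
#!/usr/bin/env bash

# Hypothetical helper: succeed if a TCP connection to host:port opens
# within 5 seconds, using bash's built-in /dev/tcp redirection.
can_connect() {
    local host="$1" port="$2"
    timeout 5 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}

if can_connect dnaseq3.gcb.duke.edu 22; then
    echo "port 22 reachable"
else
    echo "port 22 blocked or host unreachable"
fi
```

A failure here does not distinguish a firewall block from a host that is down or a name that does not resolve, so include the host name and port when reporting the problem.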

Can I use ulimit as a cluster user?

Yes. The hard limit on open files is currently set to 1,048,576 and the soft limit to 524,288. Any user can raise the soft limit on open files up to the hard limit.

    # Check the hard open-files limit
    $ ulimit -Hn
    1048576

    # Check the soft open-files limit
    $ ulimit -Sn
    524288

    # Increase the soft open-files limit (up to the hard limit)
    $ ulimit -S -n $number_of_open_files
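For use inside a job script, the commands above could be wrapped so that a request larger than the hard limit is capped rather than rejected. This is only a sketch: `raise_nofile_limit` is a hypothetical helper name and the requested value of 1000000 is an example.

```shell
#!/usr/bin/env bash

# Hypothetical helper: set the soft open-files limit to the requested value,
# capped at the hard limit so the request cannot exceed it.
raise_nofile_limit() {
    local wanted="$1" hard
    hard="$(ulimit -Hn)"
    if [ "$hard" != "unlimited" ] && [ "$wanted" -gt "$hard" ]; then
        wanted="$hard"
    fi
    ulimit -S -n "$wanted"
}

raise_nofile_limit 1000000   # example value, an assumption
echo "soft limit is now $(ulimit -Sn)"
```

The new limit applies only to the current shell and the processes started from it, so the call belongs in the same script that launches the file-heavy program.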

What software is provided in the base installation of the DASH?

- azcopy v10

- Anaconda 3-2021.05

- SingularityCE v.3.9.5 with fakeroot configuration

- emacs 47.0

- dos2unix 7.3.4-3

- Python 3.8.4

- Python 2.7.17

How do I use Jupyter Notebooks, RStudio, and Nextflow in an Azure HPC cluster?

Please refer to the Using Jupyter Notebook page for detailed instructions on running end user web software on a compute node. You can generalize these instructions for other web software as well.

RStudio Server is best run in a container as it has many system level dependencies. The easiest way to do this is through a Slurm batch job script. See the Using RStudio Server page for more details.

Nextflow is a framework for writing processing pipelines using containers. When using Nextflow on the HPC clusters, keep these helpful tips in mind.

How do I get a user group defined?

DASH uses OIT group manager to create and maintain user groups. If your lab does not already have an OIT policy group, please submit a GetIT request via ServiceNow https://duke.service-now.com/sp?id=sc_cat_item&sys_id=ebe94fa6904d70009de783add0a5d080 and assign it to Systems-HPC-DHTS.